The hypothesis of closed-loop perception (CLP)

CLP suggests that perception of the external environment is a process in which the brain temporarily ‘grasps’ external objects and incorporates them into its motor-sensory-motor (MSM) loops. While they are perceived, such objects become virtual components of the relevant loops, hardly distinguishable from other loop components such as muscles, receptors and neurons. What primarily distinguishes external objects from body parts is the duration and state of inclusion: short, transient inclusions mark external objects, whereas long, steady inclusions mark body parts.

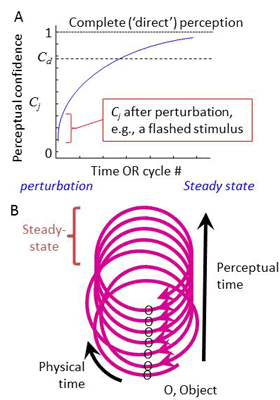

Interestingly, the perceptual dynamics suggested by this hypothesis reconcile a conflict between objective scientific observations and the subjective everyday experience of perceiving objects with no mediation. Everyday perception of a given external object, CLP suggests, is the dynamic process of including its features in MSM loops. This process starts with a perturbation, internal or external, and gradually converges towards complete inclusion, approaching, although never reaching, a state of “direct” perception. A laboratory-induced flashed stimulus, according to this model, probes the initiation of a perceptual process, whereas dreaming and imagining evoke internal components of the process.
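The idea of a process that keeps approaching a state without ever reaching it can be illustrated with a toy iteration. This is only a sketch under stated assumptions: the geometric update rule, the `gain` parameter and the function name `converge` are hypothetical choices made for illustration and are not taken from the CLP papers.

```python
def converge(initial_error: float, gain: float, steps: int) -> list[float]:
    """Return the residual 'distance from complete inclusion' after each loop cycle.

    Assumption (illustrative only): every motor-sensory-motor cycle removes a
    fixed fraction `gain` (0 < gain < 1) of the remaining error.
    """
    errors = [initial_error]
    for _ in range(steps):
        # Each cycle shrinks the residual but never cancels it completely.
        errors.append(errors[-1] * (1.0 - gain))
    return errors


# A perturbation sets the initial error; the loop then converges gradually.
errors = converge(initial_error=1.0, gain=0.5, steps=10)
```

In this toy version the residual shrinks on every cycle yet stays strictly positive, which mirrors the "approaching, although never reaching" description above.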

Relevant papers

- (2016). Perception as a closed-loop convergence process. eLife. 5.

Principles of mammalian active sensing

Active vibrissal touch

Active vision

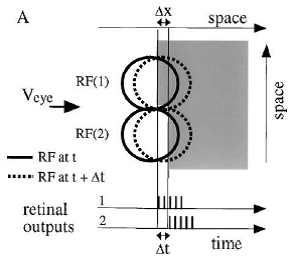

However, evidence has accumulated to indicate that fixational eye movements cannot be ignored by the visual system if fine spatial details are to be resolved. We argue that the only way the visual system can achieve its high resolution, given its fixational movements, is by seeing via these movements. Seeing via eye movements also eliminates the image instability that these movements would otherwise induce.

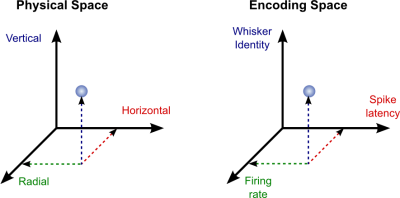

Here we present a hypothesis for vision in which coarse details are spatially encoded in gaze-related coordinates, and fine spatial details are temporally encoded in relative retinal coordinates. The temporal encoding presented here achieves its highest resolution by encoding along the elongated axes of simple cell receptive fields, and not across these axes as suggested by spatial models of vision. According to our hypothesis, fine details of shape are encoded by inter-receptor temporal phases, texture by instantaneous intra-burst rates of individual receptors, and motion by inter-burst temporal frequencies.

We further describe the ability of the visual system to read out the encoded information and recode it internally. We show how the readout of retinal signals can be facilitated by neuronal phase-locked loops (NPLLs), which lock to the retinal jitter; this locking enables recoding of motion information and temporal framing of shape and texture processing. A possible implementation of this locking-and-recoding process by specific thalamocortical loops is suggested. Overall, it is suggested that high-acuity vision is based primarily on temporal mechanisms of the sort presented here, and low-acuity vision primarily on spatial mechanisms.
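How a phase-locked loop can lock to a periodic input and recode its timing may be easier to see in a toy simulation. The sketch below is a generic first-order discrete-time loop, not the NPLL model of the papers: the linear update rule, the function name `run_npll` and all parameter values are assumptions made for illustration.

```python
def run_npll(input_period: float, intrinsic_period: float,
             gain: float, cycles: int) -> list[float]:
    """Simulate a toy phase-locked loop and return the lag (ms) at each cycle.

    Assumptions (illustrative only):
    - the input is strictly periodic with period `input_period`;
    - the internal oscillator has period `intrinsic_period` when undriven;
    - a phase comparator measures how far the oscillator trails the input,
      and its output shortens the next oscillator cycle by `gain * lag`.
    """
    t_in = 0.0    # time of the current input event
    t_osc = 0.0   # time of the current oscillator event
    lags = []
    for _ in range(cycles):
        lag = t_osc - t_in          # comparator output: temporal lag
        lags.append(lag)
        t_in += input_period
        t_osc += intrinsic_period - gain * lag   # feedback onto the oscillator
    return lags


# Two inputs with different periods settle at different steady-state lags.
lags_90 = run_npll(input_period=90.0, intrinsic_period=100.0, gain=0.5, cycles=40)
lags_80 = run_npll(input_period=80.0, intrinsic_period=100.0, gain=0.5, cycles=40)
```

In this linear sketch the lag obeys `lag[n+1] = (1 - gain) * lag[n] + (intrinsic_period - input_period)`, so it settles at `(intrinsic_period - input_period) / gain`. Once locked, the oscillator runs at the input period, and the comparator output carries the input's temporal frequency as a graded signal, which is the sense in which locking allows recoding.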

Processing of sensory data acquired via eye movements requires the encoding of eye movements in the visual system. We hypothesize that this is achieved at a high resolution by the symmetric simple cell receptive fields (ssRFs) identified in the visual cortex. Based on empirical data, ssRFs are assumed to be tuned for responding to movements along their long axis at typical fixational eye movement (FeyeM) speeds (~1–10 deg/s, orange), and to movements across their long axis at lower or higher velocities (black). The inh-to-ex delays corresponding to a single-cone flank of 0.5 arcmin are denoted below the speed values.
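The order of magnitude of the delays mentioned above can be checked with a short calculation. The sketch assumes that the delay is simply the time the retinal image takes to cross one flank, that is, flank width divided by speed; this reading and the function name `flank_crossing_delay_ms` are assumptions, not statements from the source.

```python
ARCMIN_PER_DEG = 60.0


def flank_crossing_delay_ms(flank_arcmin: float, speed_deg_per_s: float) -> float:
    """Time (ms) for the image to traverse a flank of the given width.

    Assumption (illustrative only): delay = flank width / image speed.
    """
    flank_deg = flank_arcmin / ARCMIN_PER_DEG
    return 1000.0 * flank_deg / speed_deg_per_s


# Delays at the two ends of the typical FeyeM speed range quoted above.
delay_slow = flank_crossing_delay_ms(flank_arcmin=0.5, speed_deg_per_s=1.0)
delay_fast = flank_crossing_delay_ms(flank_arcmin=0.5, speed_deg_per_s=10.0)
```

Under this assumption, a 0.5 arcmin flank corresponds to a delay of roughly 8.3 ms at 1 deg/s and roughly 0.83 ms at 10 deg/s.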

Relevant papers

- (1997). Seeing through miniature eye movements: A hypothesis. Neuroscience Letters. S2.

- (2001). Figuring space by time. Neuron. 32:185-201. (Review)

- (2008). Object localization with whiskers. Biological Cybernetics. 98:449-458. (Review)

- (2008). 'Where' and 'what' in the whisker sensorimotor system. Nature Reviews Neuroscience. 9:601-612.

- (2009). Orthogonal coding of object location. Trends in Neurosciences. 32:101-109. (Review)

- (2012). Motor-Sensory Confluence in Tactile Perception. Journal of Neuroscience. 32:14022-14032.

- (2016). On the possible roles of microsaccades and drifts in visual perception. Vision Research. 118:25-30.

Code transformations

Temporal code to rate code transformation by neuronal phase-locked loops

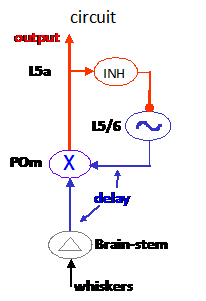

However, during subsequent stimulus cycles thalamic neurons are expected to shift into a gating mode, in which the same sensory activation is not sufficient for activating the thalamic neurons, i.e. additional depolarization is required. Such a depolarization can be induced by the other major input to these thalamic cells – the cortical feedback.

Thus, during ongoing stimulation, neurons of the posterior medial thalamic nucleus (POm), like other thalamic “relay” neurons, are assumed to function as AND gates, i.e. they should be active only when their two major inputs, from the brainstem and cortex, are co-active. When in this mode, the entire thalamocortical loop should function as a phase-locked loop.
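The AND-gate behaviour described above can be sketched with binary activity windows: the gated output is active only in time bins where both inputs are active, so its spike count depends on the relative timing of the two inputs. The 1 ms bins, the window widths and the helper names `window` and `and_gate_spike_count` are hypothetical and chosen only for illustration.

```python
def window(onset_ms: int, width_ms: int, n_bins: int) -> list[bool]:
    """Binary activity train in 1 ms bins: True while the input is active."""
    return [onset_ms <= t < onset_ms + width_ms for t in range(n_bins)]


def and_gate_spike_count(brainstem: list[bool], cortex: list[bool]) -> int:
    """Count bins in which both inputs are co-active (AND-gate output)."""
    return sum(1 for b, c in zip(brainstem, cortex) if b and c)


N_BINS = 40
brainstem = window(onset_ms=0, width_ms=10, n_bins=N_BINS)

# The same brainstem input paired with cortical feedback at three delays.
count_aligned = and_gate_spike_count(brainstem, window(0, 10, N_BINS))
count_shifted = and_gate_spike_count(brainstem, window(5, 10, N_BINS))
count_missed = and_gate_spike_count(brainstem, window(20, 10, N_BINS))
```

In this sketch the output falls from 10 active bins to 5 and then to 0 as the cortical window shifts away from the brainstem window. A temporal difference between the inputs is thereby expressed as an output count, which is the role of the phase comparator in a phase-locked loop.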

Relevant papers

- (1997). Decoding temporally encoded sensory input by cortical oscillations and thalamic phase comparators. Proceedings of the National Academy of Sciences of the United States of America. 94:11633-11638.

- (1998). Temporal-code to rate-code conversion by neuronal phase-locked loops. Neural Computation. 10:597-650.

- (2000). Transformation from temporal to rate coding in a somatosensory thalamocortical pathway. Nature. 406:302-306.

- (2003). Closed-loop neuronal computations: Focus on vibrissa somatosensation in rat. Cerebral Cortex. 13:53-62.

- (2015). Thalamic Relay or Cortico-Thalamic Processing? Old Question, New Answers. Cerebral Cortex. 25:845-848.

- (2015). Coding of Object Location in the Vibrissal Thalamocortical System. Cerebral Cortex. 25:563-577.